Google built its own chips to power machine learning algorithms

Google announced today at its I/O developer conference that it has been building its own specialized chips to power its machine learning algorithms. Perhaps the company concluded that the chips available on the market could not handle what those algorithms demand.

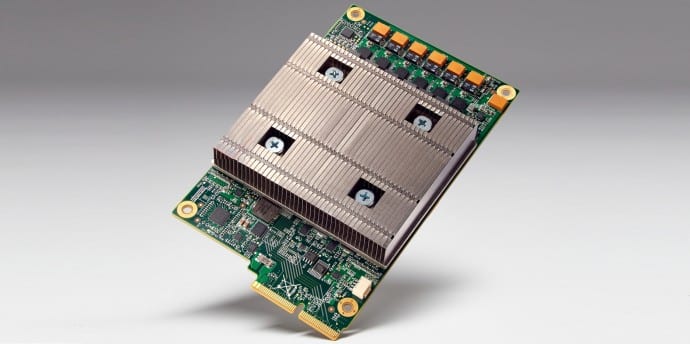

According to Urs Hölzle, Google's senior VP for technical infrastructure, the company has already been running these custom-built chips, which it calls Tensor Processing Units (TPUs), in its data centers to power its machine learning workloads.

Google says it’s getting “an order of magnitude better-optimized performance per watt for machine learning” and argues that this is “roughly equivalent to fast-forwarding technology about seven years into the future.”
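The "seven years" figure can be read as a rough Moore's-law estimate. Assuming, as a rule of thumb that the announcement does not spell out, that performance per watt doubles about every two years, a tenfold ("order of magnitude") gain works out to:

$$
\log_2 10 \approx 3.3\ \text{doublings}, \qquad 3.3 \times 2\ \text{years} \approx 6.6\ \text{years} \approx 7\ \text{years}
$$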

Google said the TPUs speed up its machine learning algorithms because those algorithms don't need the high precision of standard CPUs and GPUs. Instead of 32-bit precision, they run happily at a reduced precision of 8 bits, so each operation needs fewer transistors.
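To illustrate why 8 bits can be enough, here is a minimal sketch of a dot product computed on 8-bit values. It assumes a simple symmetric linear quantization scheme with one shared scale per vector; the function names are hypothetical, and the article does not say how the TPU actually maps 32-bit values to 8 bits.

```python
import random

def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] using one shared scale (illustrative scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def int8_dot(a, b):
    """Approximate dot(a, b) using 8-bit operands and an integer accumulator."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Each int8 x int8 product fits in 16 bits; the running sum stays an integer.
    acc = sum(x * y for x, y in zip(qa, qb))
    # Rescale once at the end to return to the floating-point domain.
    return acc * sa * sb

random.seed(0)
a = [random.gauss(0, 1) for _ in range(256)]
b = [random.gauss(0, 1) for _ in range(256)]

exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b)
print(f"float dot: {exact:.4f}  int8 dot: {approx:.4f}")
```

The 8-bit result lands close to the full-precision one because the rounding errors are small relative to the values and largely cancel across the sum. Real hardware schemes may differ, for example by using per-channel scales or zero points.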

If you use Google's voice recognition services, your queries already run on TPUs. Google's Cloud Machine Learning services also run on these chips, and AlphaGo, which recently beat the Go world champion, ran on TPUs as well.

Hölzle declined to say which foundry manufactures Google's chips, though he did say that the company currently has two different revisions of the chip in production and that they are made by two different foundries.

Google also offers its own open-source machine learning library, TensorFlow, and, unsurprisingly, the company has adapted it to run its algorithms on TPUs.