MIT’s new AI² produces five times fewer false positives than similar cybersecurity solutions

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and security firm PatternEx have built an artificial intelligence (AI) system that can predict 85 percent of cyberattacks.

Known as AI², the system also produced five times fewer false positives than existing AI-based tools for spotting cyberattacks.

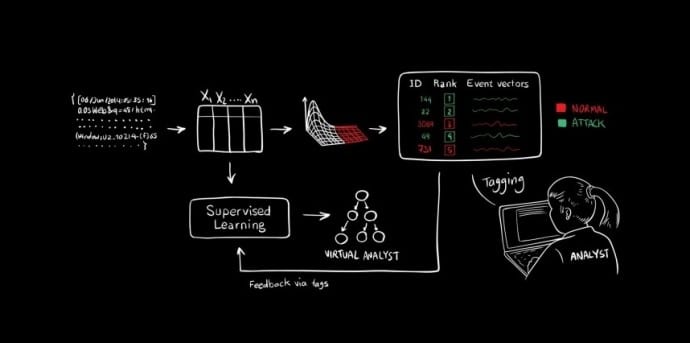

The new system doesn’t rely on AI alone. It also draws on input from human experts, something the researchers call analyst intuition (AI), hence the name AI².

In tests carried out on 3.6 billion log lines of internet activity, AI² was able to identify 85 percent of attacks ahead of time. The system first scans the content with unsupervised machine-learning techniques. At the end of each day, it presents its findings to a human operator, who then confirms or dismisses the security alerts.

This human feedback is then incorporated into the learning system of AI² and used the next day for analyzing new logs.
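The daily feedback loop can be sketched in a few lines of Python. This is only an illustration, not the researchers’ implementation: the `FeedbackStore` class, the event signatures, the raw scores, and the smoothing rule are all assumptions made up for the example.

```python
class FeedbackStore:
    """Hypothetical store of analyst verdicts, keyed by event signature."""

    def __init__(self):
        self.confirmed = {}  # signature -> times the analyst confirmed an attack
        self.dismissed = {}  # signature -> times the analyst dismissed the alert

    def record(self, signature, is_attack):
        bucket = self.confirmed if is_attack else self.dismissed
        bucket[signature] = bucket.get(signature, 0) + 1

    def adjust(self, signature, raw_score):
        c = self.confirmed.get(signature, 0)
        d = self.dismissed.get(signature, 0)
        # Assumed rule: scale the raw anomaly score by the smoothed share of
        # past alerts with this signature that turned out to be real attacks.
        return raw_score * (c + 1) / (c + d + 2)


def review_queue(events, store, budget):
    """Return the `budget` signatures with the highest adjusted scores."""
    ranked = sorted(events, key=lambda e: store.adjust(e[0], e[1]), reverse=True)
    return [signature for signature, _ in ranked[:budget]]


# Made-up (signature, raw anomaly score) pairs standing in for one day's logs.
events = [("port_scan", 0.9), ("bulk_download", 0.8), ("odd_login_hour", 0.7)]

store = FeedbackStore()
day_one = review_queue(events, store, budget=2)

# End of day one: the analyst dismisses one alert and confirms the other.
store.record("port_scan", is_attack=False)
store.record("bulk_download", is_attack=True)

# Day two: the same raw scores are now reordered by the stored feedback.
day_two = review_queue(events, store, budget=2)
print(day_one, day_two)
```

In this toy run the dismissed signature drops out of the next day’s queue while the confirmed one moves to the top, which is the general effect the article describes.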

AI² performs better because it combines three different unsupervised learning models to sift through the raw data before anything is presented to the analyst. On day one, the system offers the 200 most abnormal events to an analyst, who manually goes through them to identify the real attacks. That information is fed back into the system, and within a few days it is presenting as few as 30 to 40 events for verification.
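To make the idea of combining several unsupervised models concrete, here is a minimal Python sketch. The article does not name the three models, so the three scorers below (z-score, median absolute deviation, and nearest-neighbor gap) are stand-ins chosen for simplicity, and the login counts are invented. Each scorer ranks the events, the ranks are summed, and the top `k` events go to the analyst.

```python
from statistics import mean, median, pstdev


def zscore_scores(values):
    # Distance from the mean, in standard deviations.
    mu, sigma = mean(values), pstdev(values)
    return [abs(v - mu) / sigma if sigma else 0.0 for v in values]


def mad_scores(values):
    # Distance from the median, in median absolute deviations.
    med = median(values)
    mad = median([abs(v - med) for v in values])
    return [abs(v - med) / mad if mad else 0.0 for v in values]


def neighbor_gap_scores(values):
    # Distance to the closest other observation.
    return [
        min(abs(v - w) for j, w in enumerate(values) if j != i)
        for i, v in enumerate(values)
    ]


def to_ranks(scores):
    # Rank 0 is the least abnormal, len(scores) - 1 the most abnormal.
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0] * len(scores)
    for rank, idx in enumerate(order):
        ranks[idx] = rank
    return ranks


def top_k_anomalies(values, k):
    """Indices of the k events with the highest summed rank across scorers."""
    detectors = (zscore_scores, mad_scores, neighbor_gap_scores)
    rank_lists = [to_ranks(detect(values)) for detect in detectors]
    combined = [sum(r[i] for r in rank_lists) for i in range(len(values))]
    return sorted(range(len(values)), key=lambda i: combined[i], reverse=True)[:k]


# Invented data: login attempts per hour for ten hosts.
logins_per_hour = [12, 15, 11, 14, 13, 250, 12, 16, 90, 13]
print(top_k_anomalies(logins_per_hour, k=2))
```

Summing ranks rather than raw scores keeps any single scorer with a large numeric range from dominating the result. In the system described above, `k` would start at 200 and shrink as analyst feedback accumulates.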

“These results show that our analyst-in-the-loop security system is an accurate, scalable, and cost-efficient mechanism to successfully defend against ever-changing attacks,” researchers concluded.

CSAIL research scientist Kalyan Veeramachaneni developed AI² with former CSAIL postdoc Ignacio Arnaldo, now a chief data scientist at PatternEx. “The more attacks the system detects, the more analyst feedback it receives, which, in turn, improves the accuracy of future predictions,” Veeramachaneni says. “That human-machine interaction creates a beautiful, cascading effect.”

The best thing about AI² is that, over time, as the system gets smarter and learns to spot more and more attack vectors, it needs less human input than it did at the beginning.

The research paper, “AI²: Training a big data machine to defend,” was presented at last week’s IEEE International Conference on Big Data Security in New York City.