Google's New Neural Network With “Superhuman” Skills Can Tell The Location of Almost Any Image

Until now, a photo's location was revealed only if you had enabled geo-tagging in your camera settings. That may be about to change: if the new AI works well, Google may soon not need geo-tagging information to know where your photo was taken.

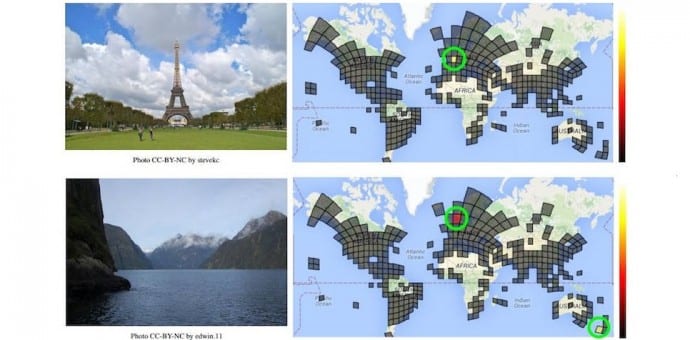

The search giant has developed a new deep-learning system called PlaNet that can tell where a photo was taken just by analysing its pixels.

A Google team led by Tobias Weyand, a computer vision scientist at Google, has created a neural network trained on more than 91 million geo-tagged images from across the planet, enabling it to spot patterns and estimate where an image was taken. It can also recognise different landscapes, locally typical objects, and even plants and animals in photos.

Google’s PlaNet tool analyses the pixels of a photo and compares them against the patterns it has learned from millions of images, looking for similarities. Although the task may seem enormous for a human, PlaNet performs it with a model that takes up only 377MB, a trivial amount of storage for Google.
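One common way to frame photo geolocation is as classification: the globe is divided into geographic cells, each training photo is labelled with the cell that contains its geo-tag, and the network learns to output a probability for every cell. The sketch below assumes that framing and uses a hypothetical fixed 10-degree grid (`CELL_DEG`) and hypothetical helpers (`cell_id`, `cell_centre`, `predict_location`); it is an illustration, not PlaNet's actual partitioning or code. The neural network itself is omitted, and the `cell_probs` dictionary stands in for its output.

```python
# Hypothetical grid size in degrees (an assumption, not PlaNet's real partitioning).
CELL_DEG = 10.0
N_ROWS = int(180 / CELL_DEG)
N_COLS = int(360 / CELL_DEG)


def cell_id(lat: float, lng: float) -> int:
    """Map a geo-tag (degrees) to the index of the grid cell containing it."""
    row = min(int((lat + 90.0) // CELL_DEG), N_ROWS - 1)  # clamp lat = 90
    col = min(int((lng + 180.0) // CELL_DEG), N_COLS - 1)  # clamp lng = 180
    return row * N_COLS + col


def cell_centre(cid: int) -> tuple[float, float]:
    """Return the (lat, lng) centre of a grid cell."""
    row, col = divmod(cid, N_COLS)
    return (-90.0 + (row + 0.5) * CELL_DEG, -180.0 + (col + 0.5) * CELL_DEG)


def predict_location(cell_probs: dict[int, float]) -> tuple[float, float]:
    """Pick the most probable cell (stand-in for the network's output) and return its centre."""
    best = max(cell_probs, key=cell_probs.get)
    return cell_centre(best)


label = cell_id(48.86, 2.35)  # training label for a photo geo-tagged in Paris
print(label, predict_location({label: 0.7, 10: 0.3}))
```

Here a photo geo-tagged in Paris becomes cell 486, and a prediction that puts most of its probability on that cell maps back to the cell centre (45.0, 5.0). Finer cells allow more precise estimates, at the cost of fewer training photos per cell.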

In a test on 2.3 million images, PlaNet identified the country of origin with 28.4 percent accuracy and the continent of origin with 48 percent accuracy. It also located about 3.6 percent of images at street-level accuracy and 10.1 percent at city-level accuracy.
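Accuracy figures at different levels like these are usually computed by measuring the great-circle distance between the predicted and true coordinates and counting how many predictions fall within a threshold for each level. The sketch below assumes the haversine formula and illustrative thresholds (1 km for street, 25 km for city, 750 km for country, 2,500 km for continent); the thresholds and the function names `haversine_km` and `accuracy_at_levels` are assumptions, not details given in this article.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

# Illustrative distance thresholds per level (assumed, not from the article).
THRESHOLDS_KM = {"street": 1.0, "city": 25.0, "country": 750.0, "continent": 2500.0}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lng) points given in degrees."""
    lat1, lng1 = map(math.radians, a)
    lat2, lng2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lng2 - lng1) / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot for near-antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def accuracy_at_levels(predictions, truths) -> dict[str, float]:
    """Fraction of predictions within each level's distance threshold of the truth."""
    distances = [haversine_km(p, t) for p, t in zip(predictions, truths)]
    return {
        level: sum(d <= km for d in distances) / len(distances)
        for level, km in THRESHOLDS_KM.items()
    }


preds = [(48.86, 2.35), (45.0, 5.0), (0.0, 0.0)]
truths = [(48.86, 2.35)] * 3
print(accuracy_at_levels(preds, truths))
```

With one exact prediction, one roughly 470 km off, and one several thousand kilometres off, the same set of guesses scores 1/3 at street and city level but 2/3 at country and continent level, which is why coarse-level accuracy is always at least as high as fine-level accuracy.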

PlaNet is not 100 percent accurate yet, but like other machine learning systems it is likely to improve steadily as it learns from more data. In fact, PlaNet has already beaten humans in many of the tests. The reason, Weyand explains to MIT Technology Review, is that PlaNet has seen more places than any of us have, and has “learned subtle cues of different scenes that are even hard for a well-travelled human to distinguish.”