NVIDIA’s GP100 Pascal GPUs first to feature 4-Hi HBM2 and a 4096-bit memory bus

NVIDIA has officially released its first PCIe GPU with HBM2 memory, beating AMD, its only major rival in the market, to the new memory standard. The P100 connects over PCIe 3.0, but it will only be sold as a Tesla model, a line designed for workstation use rather than gaming (it can still be used for gaming, but at its price it is certainly not worth the money). This also means that AMD could still become the first company to release a gaming-oriented GPU with HBM2 memory.

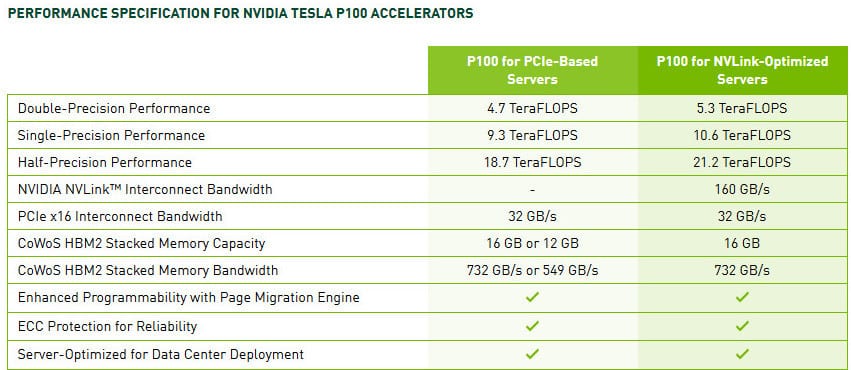

NVIDIA’s P100 GPU will come in several models, with 16GB and 12GB variants that offer memory bandwidths of 732GB/s and 549GB/s respectively. The 16GB version uses four 4GB HBM2 stacks, while the 12GB version has only three active 4GB stacks.
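
The two bandwidth figures follow directly from the number of active stacks. The short Python sketch below works through that arithmetic. It assumes each HBM2 stack has a 1024-bit interface (the width defined by the HBM2 standard, not stated in this article) and that every stack runs at the same speed; the function and constant names are made up for illustration.

```python
# Sketch of the memory arithmetic, under the assumptions stated above.
HBM2_STACK_BUS_BITS = 1024   # assumption: interface width of one HBM2 stack
FULL_STACKS = 4              # 16GB model: four active 4GB stacks
FULL_BANDWIDTH_GBS = 732.0   # 16GB model bandwidth quoted in the article


def memory_config(active_stacks, stack_capacity_gb=4):
    """Return capacity, bus width and bandwidth for a given stack count."""
    per_stack_gbs = FULL_BANDWIDTH_GBS / FULL_STACKS  # 183 GB/s per stack
    return {
        "capacity_gb": active_stacks * stack_capacity_gb,
        "bus_bits": active_stacks * HBM2_STACK_BUS_BITS,
        "bandwidth_gbs": active_stacks * per_stack_gbs,
    }


def per_pin_rate_gbps(bandwidth_gbs, bus_bits):
    """Data rate of a single bus pin in Gbit/s implied by the totals."""
    return bandwidth_gbs * 8 / bus_bits


for stacks in (4, 3):
    cfg = memory_config(stacks)
    rate = per_pin_rate_gbps(cfg["bandwidth_gbs"], cfg["bus_bits"])
    print(cfg, f"per-pin rate: {rate:.2f} Gbit/s")
```

Under these assumptions, disabling one stack drops the bus from 4096 to 3072 bits and the bandwidth from 732GB/s to 549GB/s, while the per-pin data rate stays the same.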

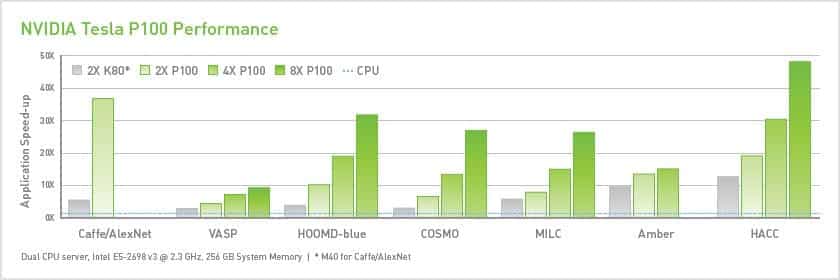

With this new GPU, NVIDIA is showcasing how scalable its P100 GPUs can be when used with NVLink, which allows up to 8 P100 GPUs in a single system. Unfortunately, the new PCIe Tesla P100 will be limited to PCIe bandwidth, which restricts both the number of GPUs that can be used in a single server and the bandwidth available to them for inter-GPU communication.
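
To put the PCIe limit in rough numbers, the sketch below compares the theoretical one-direction bandwidth of a PCIe 3.0 x16 slot with that of NVLink. The figures are assumptions that do not come from this article: PCIe 3.0 runs at 8 GT/s per lane with 128b/130b encoding, and first-generation NVLink on the P100 is taken as four links at 20GB/s per direction each, per NVIDIA's published specifications. Real-world throughput will be lower on both.

```python
# Theoretical peak figures only; all constants are assumptions noted above.
PCIE3_GT_PER_LANE = 8e9        # PCIe 3.0: 8 giga-transfers/s per lane
PCIE3_ENCODING = 128 / 130     # 128b/130b line-encoding efficiency
NVLINK1_LINK_GBS = 20.0        # assumed NVLink 1.0 rate per link, per direction
NVLINK1_LINKS = 4              # assumed number of NVLink links on a P100


def pcie3_bandwidth_gbs(lanes=16):
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s."""
    bits_per_second = lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING
    return bits_per_second / 8 / 1e9


def nvlink_bandwidth_gbs(links=NVLINK1_LINKS):
    """Theoretical one-direction NVLink bandwidth in GB/s."""
    return links * NVLINK1_LINK_GBS


pcie = pcie3_bandwidth_gbs()
nvlink = nvlink_bandwidth_gbs()
print(f"PCIe 3.0 x16: {pcie:.2f} GB/s per direction")
print(f"NVLink (4 links): {nvlink:.2f} GB/s per direction")
print(f"Ratio: {nvlink / pcie:.1f}x")
```

On these assumed figures, the PCIe card has roughly a fifth of the GPU-to-GPU bandwidth of an NVLink-connected P100.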

However, given how well the graphics processor scales for other tasks, it should still prove beneficial in the long run.