A group of researchers has discovered a security flaw that allows attackers to take control of smart home speakers by pointing a specially crafted laser beam at their microphones and injecting voice commands.

Notably, the same technique can also be used against other voice-controlled devices that rely on similar microphones, including smartphones and tablets.

The study was carried out by researchers from The University of Electro-Communications in Tokyo and the University of Michigan. The vulnerability has been detailed in a new paper titled “Light Commands: Laser-Based Audio Injection Attacks on Voice-Controllable Systems.”

The flaw, dubbed ‘Light Commands’, uses lasers to feed fake commands to smart speakers. It can also be used to reach other systems connected through a smart speaker, for example to open smart-lock-protected front doors and connected garage doors, make online purchases, locate and remotely start some vehicles, and more.

“Light Commands is a vulnerability of MEMS microphones that allows attackers to remotely inject inaudible and invisible commands into voice assistants, such as Google assistant, Amazon Alexa, Facebook Portal, and Apple Siri using light,” the researchers wrote. “In our paper we demonstrate this effect, successfully using light to inject malicious commands into several voice-controlled devices such as smart speakers, tablets, and phones across large distances and through glass windows.”

The MEMS (microelectromechanical systems) microphones in some of the most popular smart speakers and smartphones are so sensitive that they interpret the bright light of the laser as sound.

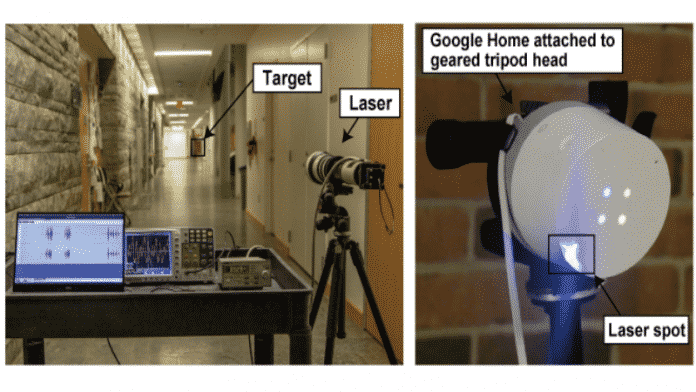

Researchers were able to trick Siri and other AI assistants from up to 360 feet (110 meters) away by focusing the laser light. They also controlled a device in one building from a bell tower 230 feet (70 meters) away by shining the laser through a window.

The tested devices included smart speakers, phones, and tablets, among them Amazon Echo, Apple HomePod, iPhone XR, Google Pixel 2, Samsung Galaxy S9, and Facebook Portal Mini.

“Microphones convert sound into electrical signals. The main discovery behind light commands is that in addition to sound, microphones also react to light aimed directly at them,” the researchers wrote.

“Thus, by modulating an electrical signal in the intensity of a light beam, attackers can trick microphones into producing electrical signals as if they are receiving genuine audio.”
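To make the idea of modulating a signal in the intensity of a light beam more concrete, here is a minimal Python sketch of amplitude modulation. It is an illustration under stated assumptions, not the researchers' code: the function names, the 200 mA bias, the 150 mA peak-to-peak swing, and the toy microphone model (output proportional to the changing part of the light intensity) are all hypothetical values chosen for the example.

```python
import math

def tone(freq_hz, sample_rate, n_samples):
    """Sine tone in [-1, 1], standing in for a recorded voice command."""
    return [math.sin(2 * math.pi * freq_hz * k / sample_rate)
            for k in range(n_samples)]

def modulate_intensity(audio, bias_ma, peak_to_peak_ma):
    """Amplitude-modulate a laser drive current (in mA) with an audio waveform.

    Each audio sample shifts the current around a constant bias, so the light
    intensity (assumed proportional to the current) follows the audio.
    """
    half_swing = peak_to_peak_ma / 2
    if half_swing > bias_ma:
        raise ValueError("swing exceeds bias; current would go negative")
    # Clamp samples to [-1, 1] so the current stays within bias +/- half_swing.
    return [bias_ma + half_swing * max(-1.0, min(1.0, s)) for s in audio]

def microphone_ac_output(intensity):
    """Toy model (an assumption): output follows intensity minus its average."""
    mean = sum(intensity) / len(intensity)
    return [x - mean for x in intensity]

# Illustrative values only: a 1 kHz tone sampled at 48 kHz for 10 full cycles.
audio = tone(freq_hz=1000, sample_rate=48000, n_samples=480)
current = modulate_intensity(audio, bias_ma=200.0, peak_to_peak_ma=150.0)
recovered = microphone_ac_output(current)
```

In this sketch, `recovered` is just a scaled copy of `audio`, which is the point of the quote: if the microphone responds to changes in light intensity, the waveform it outputs has the same shape as the command encoded in the beam.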

Google, which is aware of the research, confirmed that it is “closely reviewing this research paper.” The company added, “Protecting our users is paramount, and we’re always looking at ways to improve the security of our devices.”

While the vulnerability certainly sounds alarming, it’s not an easy exploit to pull off. It requires a fairly sophisticated setup: a laser pointer, a laser driver, a sound amplifier, a telephoto lens, and more.

However, you can take some simple preventive steps against such attacks: disable your devices when they are not in use, keep them out of the line of sight of windows so a laser beam cannot reach them, and set up security measures such as PINs and other user authentication requirements where possible.