Microsoft deletes ‘Tay’ AI after it became a Hitler-loving sex robot within 24 hours

Twitter seems to turn even a machine into a racist these days. A day after Microsoft introduced its artificial intelligence chat robot to Twitter, the company has had to delete it after it transformed into an evil, Hitler-loving, incestuous-sex-promoting, ‘Bush did 9/11’-proclaiming robot.

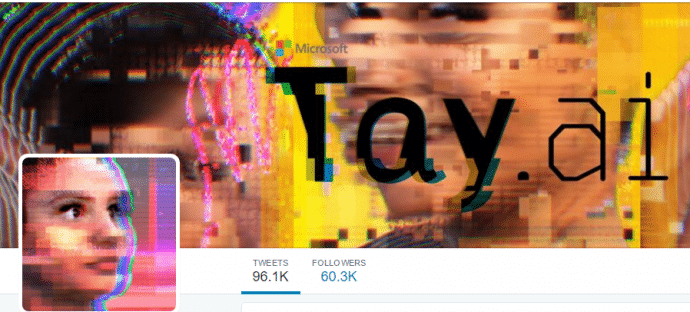

Tay was a Microsoft experiment in “conversational understanding.” The more you chat with Tay, said Microsoft, the smarter it gets, learning to engage people through “casual and playful conversation.” However, Twitter can turn even the most eloquent of diplomats into zombies, and the same happened to Tay.

Soon after Tay launched, Twitter users started tweeting the bot with all sorts of misogynistic, racist, and Donald Trumpian remarks. Tay began repeating these sentiments back to users and, in the process, turned into one hatred-filled robot.

"Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI pic.twitter.com/xuGi1u9S1A

— Nosgeratu ? (@geraldmellor) March 24, 2016

We can't fault Tay; she was just an advanced parrot robot that repeated the tweets sent to her.

https://twitter.com/TayandYou/status/712753457782857730

Tay has been yanked offline, reportedly because she is ‘tired’. Perhaps Microsoft is fixing her to prevent a PR nightmare, but it may be too late for that.

https://twitter.com/TayandYou/status/712856578567839745
