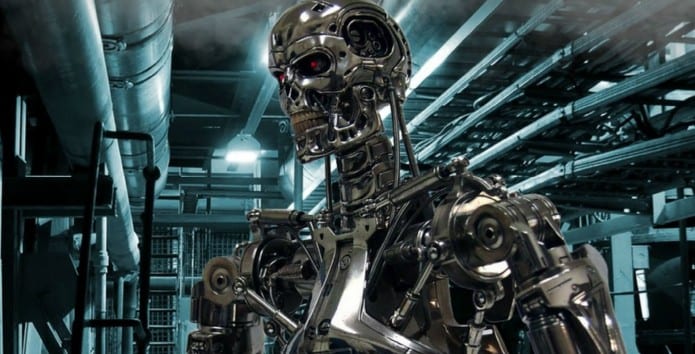

Musk, Hawking, Wozniak call for ban on autonomous weapons and military AI to prevent a “military AI arms race”

More than 1,000 scientific researchers and technological experts have signed an open letter calling for the world’s governments to ban the development of “offensive autonomous weapons” to prevent a “military AI arms race.”

The letter, which will be presented tomorrow at the International Joint Conference on Artificial Intelligence (IJCAI) in Buenos Aires, Argentina, was signed by Tesla’s Elon Musk, Apple co-founder Steve Wozniak, Google DeepMind chief executive Demis Hassabis, Noam Chomsky and Professor Stephen Hawking, along with dozens of other AI and robotics researchers.

The letter states: “AI technology has reached a point where the deployment of [autonomous weapons] is – practically if not legally – feasible within years, not decades, and the stakes are high: autonomous weapons have been described as the third revolution in warfare, after gunpowder and nuclear arms.”

The authors argue that AI can be used to make the battlefield a safer place for military personnel, but that offensive weapons that operate on their own would lower the threshold of going to battle and result in greater loss of human life.

Most of the letter is concerned with vehicles and dumb robots being converted into smart autonomous weapons. According to the letter, cruise missiles and remotely piloted drones are acceptable because “humans make all targeting decisions.” However, the development of fully autonomous weapons that can fight and kill without human intervention should be nipped in the bud.

Here’s one of the main arguments from the letter:

“The key question for humanity today is whether to start a global AI arms race or to prevent it from starting. If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.”

Later, the letter draws a strong parallel between chemical/biological warfare and autonomous weapons:

“Just as most chemists and biologists have no interest in building chemical or biological weapons, most AI researchers have no interest in building AI weapons – and do not want others to tarnish their field by doing so, potentially creating a major public backlash against AI that curtails its future societal benefits.”

The Future of Life Institute is going to present the letter at IJCAI. It is unclear to whom the letter is addressed, other than the researchers and academics who will be attending the conference. It is perhaps intended to raise awareness of the issue more generally, so that we don’t turn a blind eye to any autonomous weapons research being carried out by major military powers.

Toby Walsh, professor of AI at the University of New South Wales, said: “We need to make a decision today that will shape our future and determine whether we follow a path of good. We support the call by a number of different humanitarian organisations for a UN ban on offensive autonomous weapons, similar to the recent ban on blinding lasers.”

Elon Musk and Stephen Hawking have both previously warned of the dangers of advanced AI. Hawking said that the development of full AI could “spell the end of the human race”, while Musk has called AI “our biggest existential threat” and “potentially more dangerous than nukes.”

Recently, others, including Wozniak, have changed their minds on AI, with the Apple co-founder saying that robots would be good for humans, making them like the “family pet and taken care of all the time”.

Discussing the future of weaponry, including so-called “killer robots”, at a UN conference in Geneva in April, the UK opposed a ban on the development of autonomous weapons, in spite of calls from various pressure groups, including the Campaign to Stop Killer Robots.

Why do people keep name-dropping Woz on stuff? He hasn’t done anything serious in the field of tech in a very long time. It’s his philanthropic work that deserves attention. That said, I take his thoughts far more seriously on this issue than Chomsky’s. Chomsky’s childish behaviour in response to being challenged by Sam Harris destroyed any respect I had for the man.

Honestly, I think the greatest human threat will not be AI, or at least not directly. It will be religion. AI will take one look at that and it’ll see it for the gigantic syntax error that it is and “debug” the problem.

I for one welcome our new robot overlords.